Natural Language Processing (NLP), Generative Adversarial Networks (GANs), and other machine learning techniques are intriguingly complex. In our previous blog, we gave an introduction to GANs. Machine learning in general offers a plethora of opportunities, and GANs in particular have shown potential in artwork generation and intricate data tasks that were not feasible a few years ago. So, let’s explore this fascinating world.

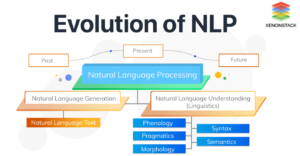

The graphical representation of NLP is shown below:

Graphical Representation of NLP

GANs and Natural Language Processing (NLP)

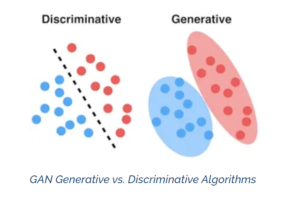

First and foremost, GANs and NLP have a captivating relationship. GANs often focus on image generation, but they are also advancing in text generation tasks. NLP, however, has its own intricacies. One of these is tokenization, in which text is broken down into smaller chunks, or “tokens”. Tokenization is vital for both understanding and processing language. Thus, when GANs and tokenization combine, new possibilities arise for text manipulation and generation. The graphical representation of GANs is shown below:

Image Credit: AlgoScale
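To make the tokenization step concrete, here is a minimal sketch in plain Python. It assumes a simple word-and-punctuation split; production NLP pipelines typically use subword tokenizers instead. The function names `tokenize` and `build_vocab` are illustrative, not taken from any library.

```python
import re

def tokenize(text):
    """Split text into lowercase word tokens and single punctuation tokens."""
    return re.findall(r"\w+|[^\w\s]", text.lower())

def build_vocab(tokens):
    """Give each distinct token an integer id, in order of first appearance."""
    vocab = {}
    for token in tokens:
        if token not in vocab:
            vocab[token] = len(vocab)
    return vocab

tokens = tokenize("GANs generate text, and tokenization prepares text.")
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]

print(tokens)
# ['gans', 'generate', 'text', ',', 'and', 'tokenization', 'prepares', 'text', '.']
print(ids)
# [0, 1, 2, 3, 4, 5, 6, 2, 7]
```

The integer ids are what a model actually consumes. Note that the repeated word "text" maps to the same id both times.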

The Role of Fine-tuning

Moreover, fine-tuning is vital in the world of GANs. It begins with a pre-trained model and then adjusts that model’s weights so that it aligns with a specific task. This process is especially important for models trained on vast datasets. Fine-tuning therefore gives GANs versatility: they can cater to niche domains and adapt to diverse challenges.
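The idea of starting from pre-trained weights and nudging them toward a new task can be sketched with a deliberately tiny model. The example below is an illustration, not a GAN: it assumes a one-variable linear model whose "pre-trained" weights (`pretrained_w`, `pretrained_b`) are hypothetical values, and fine-tunes them with plain gradient descent on a small task-specific dataset.

```python
def mse(w, b, data):
    """Mean squared error of the linear model y = w * x + b on the data."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)

def fine_tune(w, b, data, lr=0.05, steps=200):
    """Continue training existing weights with gradient descent on new data."""
    n = len(data)
    for _ in range(steps):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Hypothetical weights standing in for a model trained on a large dataset.
pretrained_w, pretrained_b = 2.0, 0.0

# Small task-specific dataset that follows a slightly different rule.
task_data = [(x, 2.5 * x + 1.0) for x in (0.0, 1.0, 2.0, 3.0)]

loss_before = mse(pretrained_w, pretrained_b, task_data)
tuned_w, tuned_b = fine_tune(pretrained_w, pretrained_b, task_data)
loss_after = mse(tuned_w, tuned_b, task_data)

print(loss_before, loss_after)
```

The same pattern applies to larger models: training resumes from existing weights rather than from a random initialization, usually with a small learning rate so the earlier knowledge is not overwritten too quickly.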

Transfer Learning: A Leap Forward

Transfer learning offers another approach to boosting GANs’ efficiency. Essentially, transfer learning involves taking knowledge from one model and applying it to another. In the context of GANs, this means leveraging pre-existing knowledge to speed up learning. Moreover, combined with the power of tokenization from the NLP world, transfer learning can supercharge the capabilities of GANs in text-related tasks.
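One common way to reuse knowledge from an existing model is to keep its learned representation frozen and train only a small new component on top. The sketch below illustrates that pattern on tokenized text. It is a generic illustration rather than a GAN, and the embedding table `PRETRAINED_EMBEDDINGS`, its values, and the sentiment labels are all made up for the example.

```python
import math

# Hypothetical "pre-trained" token embeddings; they are never updated below.
PRETRAINED_EMBEDDINGS = {
    "good": [1.0, 0.2],
    "great": [0.9, 0.1],
    "bad": [-1.0, 0.3],
    "awful": [-0.8, 0.2],
}

def featurize(tokens):
    """Average the frozen embeddings of the known tokens."""
    vectors = [PRETRAINED_EMBEDDINGS[t] for t in tokens if t in PRETRAINED_EMBEDDINGS]
    if not vectors:
        return [0.0, 0.0]
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(2)]

def predict(head, features):
    """Probability of the positive class from a logistic-regression head."""
    z = sum(w * f for w, f in zip(head["weights"], features)) + head["bias"]
    return 1.0 / (1.0 + math.exp(-z))

def train_head(examples, lr=0.5, epochs=200):
    """Train only the new head; the embeddings stay frozen."""
    head = {"weights": [0.0, 0.0], "bias": 0.0}
    for _ in range(epochs):
        for tokens, label in examples:
            features = featurize(tokens)
            error = predict(head, features) - label  # log-loss gradient w.r.t. z
            head["weights"] = [w - lr * error * f
                               for w, f in zip(head["weights"], features)]
            head["bias"] -= lr * error
    return head

examples = [(["good"], 1), (["great"], 1), (["bad"], 0), (["awful"], 0)]
head = train_head(examples)

print(predict(head, featurize(["great", "good"])))
print(predict(head, featurize(["awful"])))
```

Because only the three numbers in `head` are learned, training needs very little data. That is the practical appeal of transferring an existing representation instead of learning one from scratch.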

Applications Beyond Imagery

Traditionally, GANs were known for image generation. However, their capabilities are more extensive. They can incorporate elements of NLP, like tokenization, which broadens their horizons. For instance, combining these elements can enhance text outputs, a long-sought goal in AI. Additionally, we can fine-tune GAN models and tailor them to specific linguistic tasks. The future is therefore promising for GANs. It’s not just about visuals. It’s also about the vast domain of language.

Challenges on the Horizon

GANs offer enormous potential, but they also have challenges. One challenge is stability during training, because balancing the generator and discriminator is difficult. Integrating NLP tasks into GANs is another challenge: NLP tasks are complex, and GANs are not always well-suited for them. However, methodologies like transfer learning provide hope. Transfer learning can help GANs learn from existing models, which may make them more stable and better at NLP tasks.
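The balancing act between generator and discriminator is easier to see in code. Below is a minimal sketch of an alternating GAN training loop on one-dimensional numbers, written in plain Python with hand-derived gradients. Everything in it is an assumption made for illustration: the "real" data is drawn from a Gaussian centered at 4.0, the generator is a linear map of noise, the discriminator is a logistic unit, and `d_steps` is a hypothetical knob for how many discriminator updates run per generator update.

```python
import math
import random

def sigmoid(z):
    z = max(-30.0, min(30.0, z))  # clamp to avoid overflow in exp
    return 1.0 / (1.0 + math.exp(-z))

def train_toy_gan(steps=1000, d_steps=1, lr=0.02, seed=0):
    rng = random.Random(seed)
    g = {"a": 1.0, "b": 0.0}   # generator: fake = a * noise + b
    d = {"w": 0.0, "c": 0.0}   # discriminator: D(x) = sigmoid(w * x + c)
    for _ in range(steps):
        # Discriminator update(s): push D(real) up and D(fake) down.
        for _ in range(d_steps):
            real = rng.gauss(4.0, 0.5)
            fake = g["a"] * rng.gauss(0.0, 1.0) + g["b"]
            p_real = sigmoid(d["w"] * real + d["c"])
            p_fake = sigmoid(d["w"] * fake + d["c"])
            # Gradient of -[log D(real) + log(1 - D(fake))]
            d["w"] -= lr * (-(1.0 - p_real) * real + p_fake * fake)
            d["c"] -= lr * (-(1.0 - p_real) + p_fake)
        # Generator update: push D(fake) up (non-saturating loss -log D(fake)).
        noise = rng.gauss(0.0, 1.0)
        fake = g["a"] * noise + g["b"]
        p_fake = sigmoid(d["w"] * fake + d["c"])
        grad_fake = -(1.0 - p_fake) * d["w"]  # d(loss)/d(fake)
        g["a"] -= lr * grad_fake * noise
        g["b"] -= lr * grad_fake
    return g, d

generator, discriminator = train_toy_gan()
print(generator, discriminator)
```

Each network's update depends on the current state of the other, so neither is minimizing a fixed objective. Changing `d_steps` or `lr` changes how strongly one side outpaces the other, which is the source of the instability described above.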

Wrapping Up

Generative Adversarial Networks (GANs), though initially conceived for image tasks, have expanded their reach to NLP. Methodologies like tokenization, fine-tuning, and transfer learning are elevating the capabilities of GANs in NLP. As we progress, it will be enthralling to witness how GANs evolve and reshape the AI landscape. Indeed, the future of GANs, bolstered by these synergies, seems boundlessly promising.